Deploying Element on a Genestack Cluster (with Gateway API + Envoy)

If you have been meaning to run your own chat server without turning your cloud into a collection of half-baked YAML files, this might make your overall chat experience a little more enjoyable. Below is a reproducible path to deploy the Element Synapse homeserver, Matrix Authentication Service (MAS), and Matrix RTC (SFU) on a Genestack Kubernetes cluster using Helm. The result is a fully supportable Element deployment that uses the Gateway API (Envoy Gateway). The deployment also bundles Heisenbridge, a bouncer-style IRC bridge for Matrix that creates a rich experience for IRC use cases without relying on external gateways.

I’ll explain the why behind each step, call out the sharp edges, and share a few "day-2" tips so you’re not PagerDuty’s newest pen pal.

Background

I've been running The Lounge for a few years, and while it's fine, it was never great. So I decided today was a great day to create some much-needed complication in my life and get on the Element train. The desire for a better chat experience comes from wanting a modern web UI, push notifications, and a great experience on mobile. My primary goal is a fantastic experience when interacting with IRC.

TL;DR

- Create config directories and a `hostnames.yaml` for the Helm chart.
- Create the `ess` namespace (Element Synapse Stack).
- Install the stack via Helm.
- Tweak HAProxy to handle requests.
- Add Gateway API `HTTPRoute`s and patch Envoy Gateway listeners for TLS.
- Cover troubleshooting and day-2 ops.

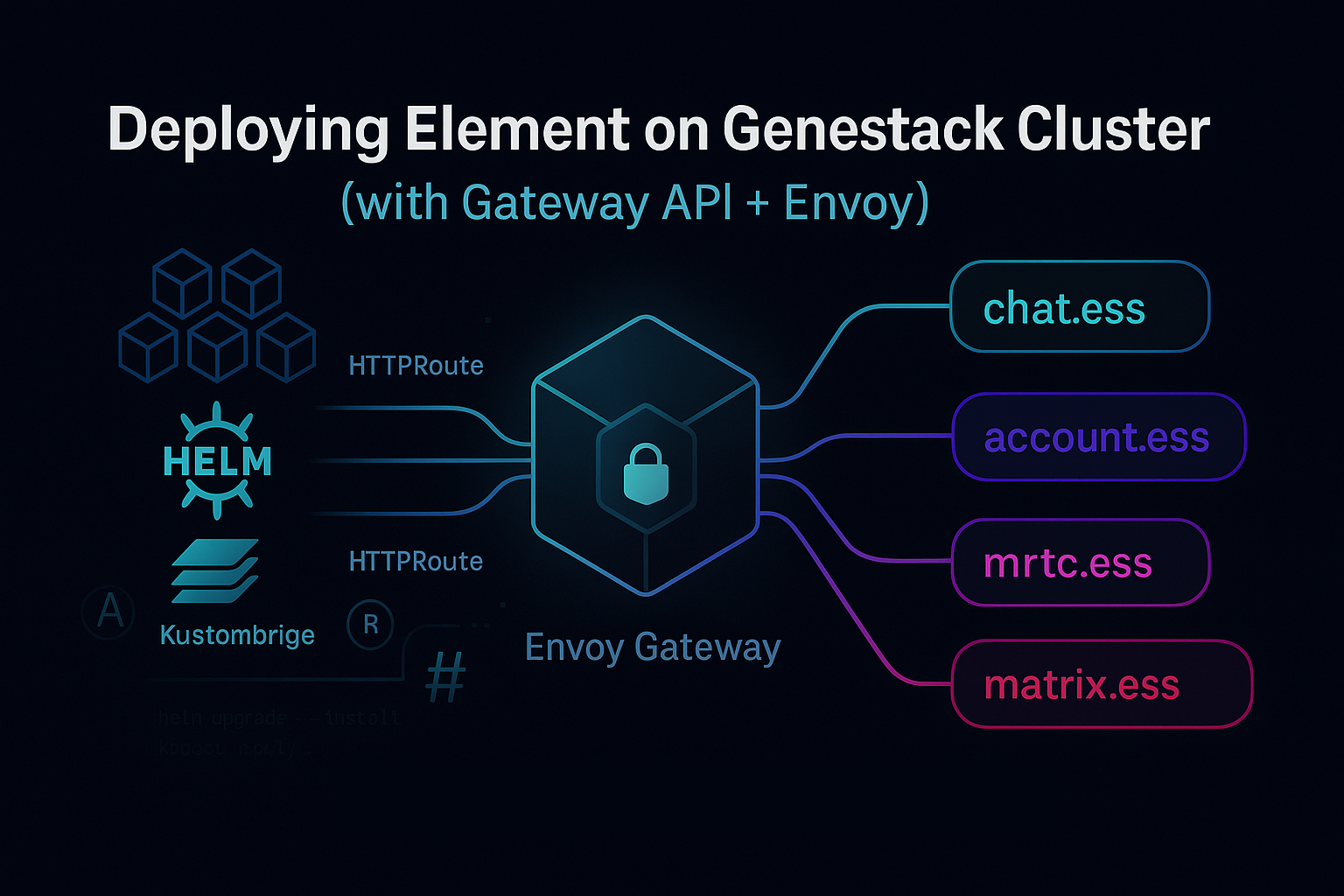

Architecture at a glance

Why Genestack + Element?

Open protocols, open choices

Element gives you a portable real-time comms layer (chat, VoIP/RTC) without vendor lock-in. Genestack is the Rackspace approach to managing open infrastructure, so pairing the two makes a lot of sense.

Kubernetes-native operations

Genestack brings consistent, declarative deployment patterns and integrates cleanly with Gateway API, so traffic management is first-class. Additionally, combining Helm with Kustomize means I can have a full-featured deployment that can grow in ways the upstream Element chart maintainers never had to anticipate.

Day-2 sanity

Helm+Kustomize releases, namespaced routes, and a single gateway surface make upgrades and rollbacks predictable, all while giving me the ability to handle one-off implementation details.

Prerequisites (read me before you helm upgrade)

Before running the deployment, there are a couple of prerequisites you will need to handle outside of the cluster.

A working Genestack cluster

That's right, we're starting from the premise that Genestack has been deployed. If you're looking for more information on standing up a Genestack environment, please review the deployment documentation found here.

DNS

Assuming the Genestack Cluster is online, the domain used here should already be configured.

- `ess.yourdomain.tld` (for well-known)
- `chat.ess.yourdomain.tld` (Element Web)
- `account.ess.yourdomain.tld` (MAS)
- `mrtc.ess.yourdomain.tld` (Matrix RTC)
- `matrix.ess.yourdomain.tld` (Synapse)

In general, this follows the same domain setup requirements you'd find in a typical Genestack deployment. These domains will be used internally with the Let's Encrypt provider.
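If you want a quick sanity check before going further, a small shell sketch like the one below can list the five names and report which ones resolve. The function names are my own invention, and it assumes `getent` is available on the host you run it from; `dig` or `host` would work just as well.

```shell
# Print the five hostnames this guide expects, given the base server name.
ess_hostnames() {
  base="$1"
  printf '%s\n' "$base"
  for prefix in chat account mrtc matrix; do
    printf '%s.%s\n' "$prefix" "$base"
  done
}

# Report which of those hostnames currently resolve.
# Assumes a glibc-style `getent`; swap in `dig +short` or `host` if you prefer.
check_ess_dns() {
  for host in $(ess_hostnames "$1"); do
    if getent hosts "$host" >/dev/null 2>&1; then
      printf 'ok       %s\n' "$host"
    else
      printf 'MISSING  %s\n' "$host"
    fi
  done
}
```

Calling `check_ess_dns ess.yourdomain.tld` prints one line per hostname, so a missing record is obvious before any certificate request fails.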

TLS (optional)

If you're not using the Let's Encrypt provider, you will need to create some static secrets, which hold your certificate information.

- `chat-ess-gw-tls-secret`
- `account-ess-gw-tls-secret`
- `mrtc-ess-gw-tls-secret`
- `matrix-ess-gw-tls-secret`
- `ess-gw-tls-secret` (for `ess.yourdomain.tld`)
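Here is a minimal sketch for creating those static secrets. It assumes your certificate and key files are laid out as `<dir>/<prefix>.crt` and `<dir>/<prefix>.key`, which is a hypothetical layout, so adjust to taste. The listener patch later in this post references the secrets without a namespace, so they need to live alongside the Gateway in `envoy-gateway`.

```shell
# Create one kubernetes.io/tls Secret per listener in the Gateway's namespace.
# The directory argument and the <prefix>.crt / <prefix>.key file names are
# assumptions for illustration, not something Genestack or the chart mandates.
create_ess_tls_secrets() {
  cert_dir="$1"
  for name in chat-ess account-ess mrtc-ess matrix-ess ess; do
    kubectl -n envoy-gateway create secret tls "${name}-gw-tls-secret" \
      --cert="${cert_dir}/${name}.crt" \
      --key="${cert_dir}/${name}.key"
  done
}
```

Run it as `create_ess_tls_secrets /path/to/certs`. If a secret already exists, `kubectl create` will refuse to overwrite it, which is usually what you want for certificates.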

Storage

A default StorageClass with dynamic provisioning is required (Synapse and friends need PVCs). The default name is general. If your storage class has a different name, you will need to update the Helm values file accordingly.

Getting Started

First thing to do is to create the base element directories.

mkdir /etc/genestack/helm-configs/element

This is where your helm chart overrides live. Keeping overrides in a well-known, version-able path turns “what did we deploy?” from a mystery into a commit.

mkdir -p /etc/genestack/kustomize/element/base

This is where your Kustomize overrides live. This also ensures you have a well known path for your options.

Helm Values

Create /etc/genestack/helm-configs/element/hostnames.yaml. Update the host values to your domain(s).

---
serverName: ess.yourdomain.tld
elementWeb:
  enabled: true
  ingress:
    host: chat.ess.yourdomain.tld
matrixAuthenticationService:
  enabled: true
  ingress:
    host: account.ess.yourdomain.tld
matrixRTC:
  enabled: true
  ingress:
    host: mrtc.ess.yourdomain.tld
synapse:
  enabled: true
  ingress:
    host: matrix.ess.yourdomain.tld
ingress:
  tlsEnabled: false
deploymentMarkers:
  enabled: true
wellKnownDelegation:
  enabled: true

Be sure to change yourdomain.tld to your actual DNS name.

Notes

- `serverName` is the base server name advertised via `/.well-known` (enabled below).
- `ingress.tlsEnabled: false` here just avoids the chart wiring its own Ingress TLS; we’re using **Gateway API** with Envoy for TLS termination.
- `wellKnownDelegation.enabled: true` publishes Matrix discovery under `https://ess.yourdomain.tld/.well-known/...`, delegating to your real homeserver hostnames.
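Since every file in this post uses the same `yourdomain.tld` placeholder, a small helper can do the substitution for you. This is only a sketch; the function name is hypothetical, and it rewrites files in place, so keep them in Git first.

```shell
# Replace the placeholder domain in each file passed after the domain.
# Writes through a temp file instead of `sed -i`, whose syntax differs
# between GNU and BSD sed, and copies back so file permissions are kept.
set_ess_domain() {
  domain="$1"
  shift
  for file in "$@"; do
    tmp="$(mktemp)" || return 1
    sed "s/yourdomain\.tld/${domain}/g" "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
  done
}
```

For example, `set_ess_domain example.org /etc/genestack/helm-configs/element/hostnames.yaml` updates the values file; the same call works for any other file that carries the placeholder.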

Get Kustomiz'ing

Generate the Kustomization file at /etc/genestack/kustomize/element/base/kustomization.yaml. This file is the entrypoint for Kustomize which will be used when we execute the helm install.

Kustomize is used to add Heisenbridge to the Element environment as a builtin sidecar to synapse. By bundling in Heisenbridge, the deployment is ensuring that an IRC gateway is a fundamental part of the Element chat experience, which for me, is the essential goal.

The contents of the kustomization.yaml file are as follows.

sortOptions:
  order: fifo
resources:
  - namespace.yaml
  - all.yaml
images:
  - name: heisenbridge
    newName: hif1/heisenbridge
    newTag: "latest"
  - name: yq
    newName: ghcr.io/linuxserver/yq
    newTag: "latest"
patches:
  - target:
      kind: ConfigMap
      name: ess-synapse
    patch: |-
      - op: add
        path: /data/homeserver-edit-heisenbridge.sh
        value: |
          #!/bin/bash
          set -exo pipefail
          chmod 0640 /conf/homeserver.yaml
          yq -yi '.app_service_config_files+=["/conf/heisenbridge.yaml"]' /conf/homeserver.yaml
          yq -yi ".enable_registration_without_verification=true" /conf/homeserver.yaml
          yq -yi ".encryption_enabled_by_default_for_room_type=off" /conf/homeserver.yaml
          chmod 0440 /conf/homeserver.yaml
  - target:
      kind: StatefulSet
      name: ess-synapse-main
    patch: |-
      - op: add
        path: /spec/template/spec/volumes/-
        value:
          name: ess-synapse-config-volume
          configMap:
            name: ess-synapse
      - op: add
        path: /spec/template/spec/containers/0/volumeMounts/-
        value:
          mountPath: /conf/heisenbridge.yaml
          name: rendered-config
          readOnly: true
          subPath: heisenbridge.yaml
      - op: add
        path: /spec/template/spec/containers/-
        value:
          command:
            - python
            - -m
            - heisenbridge
            - -c
            - /conf/heisenbridge.yaml
            - --listen-address
            - "0.0.0.0"
            - --listen-port
            - "9898"
            - http://localhost:8008
          name: heisenbridge
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              drop:
                - ALL
            readOnlyRootFilesystem: true
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          image: heisenbridge
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - mountPath: /conf
              name: rendered-config
          resources:
            limits:
              cpu: "1"
              memory: 256Mi
            requests:
              cpu: 100m
              memory: 64Mi
          livenessProbe:
            tcpSocket:
              port: 9898
            initialDelaySeconds: 3
            periodSeconds: 3
          readinessProbe:
            httpGet:
              path: /health
              port: 8008
            initialDelaySeconds: 3
            periodSeconds: 3
      - op: add
        path: /spec/template/spec/initContainers/-
        value:
          command:
            - python
            - -m
            - heisenbridge
            - -c
            - /conf/heisenbridge.yaml
            - --generate
            - --listen-address
            - 0.0.0.0
          name: heisenbridge-generate
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              drop:
                - ALL
            readOnlyRootFilesystem: false
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          image: heisenbridge
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - mountPath: /conf
              name: rendered-config
          resources:
            limits:
              cpu: "1"
              memory: 256Mi
            requests:
              cpu: 100m
              memory: 64Mi
      - op: add
        path: /spec/template/spec/initContainers/-
        value:
          command:
            - bash
            - /tmp/run.sh
          name: heisenbridge-yq
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              drop:
                - ALL
            readOnlyRootFilesystem: false
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          image: yq
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - mountPath: /conf
              name: rendered-config
            - name: ess-synapse-config-volume
              mountPath: /tmp/run.sh
              subPath: homeserver-edit-heisenbridge.sh
              readOnly: true
          resources:
            limits:
              cpu: "1"
              memory: 256Mi
            requests:
              cpu: 100m
              memory: 64Mi

Next is to create the namespace manifest we'll use for the deployment. This file will be created at /etc/genestack/kustomize/element/base/namespace.yaml.

---
apiVersion: v1
kind: Namespace
metadata:
  labels:
    kubernetes.io/metadata.name: ess
    pod-security.kubernetes.io/audit: privileged
    pod-security.kubernetes.io/audit-version: latest
    pod-security.kubernetes.io/enforce: privileged
    pod-security.kubernetes.io/enforce-version: latest
    pod-security.kubernetes.io/warn: privileged
    pod-security.kubernetes.io/warn-version: latest
  name: ess

Install the Matrix stack with Helm

With the Helm configuration in place and the Kustomization setup ready, you're ready to deploy. The deployment with helm is simple.

Overview

Run the helm command with the post-renderer arguments. This integrates Helm with Kustomize by default, so the rendered chart goes through the same overlay functionality we'd normally see during a typical application deployment.

helm upgrade --install \
  --namespace "ess" \
  ess oci://ghcr.io/element-hq/ess-helm/matrix-stack \
  -f /etc/genestack/helm-configs/element/hostnames.yaml \
  --post-renderer /etc/genestack/kustomize/kustomize.sh \
  --post-renderer-args element/base \
  --wait

This command pulls the matrix-stack chart from GHCR, enables Element Web, Synapse, MAS, and RTC, sets your hosts, runs the resulting helm templates through Kustomize, and waits for resources to become ready.
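If the post-renderer step feels like magic, the contract is small: Helm streams the rendered manifests to the script on stdin and applies whatever the script prints on stdout. The sketch below is not Genestack's actual `kustomize.sh`. It is a hypothetical stand-in that shows why `all.yaml` appears in the `resources` list of the kustomization file.

```shell
# Hypothetical stand-in for a Helm post-renderer, for illustration only.
# $1 is the directory that holds kustomization.yaml.
post_render() {
  overlay_dir="$1"
  # Helm hands us the fully rendered chart on stdin; park it where the
  # kustomization's `resources: [all.yaml]` entry expects to find it.
  cat > "${overlay_dir}/all.yaml"
  # Build the overlay; the patched manifests on stdout go back to Helm.
  kubectl kustomize "${overlay_dir}"
}
```

Seen this way, the Heisenbridge sidecar is just a patch applied between "Helm rendered the chart" and "Helm sent it to the API server", which is why it survives chart upgrades.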

Once the deployment is online, you can set up the environment to integrate with the Gateway API and configure a few operational settings that ensure better system integration for long-term deployments.

Add Gateway API routes

Genestack uses the Gateway API to manage routes, so to connect our Element services we need to create HTTPRoute resources. To begin, create the file /etc/genestack/gateway-api/routes/custom-element-gateway-route.yaml, which will contain all of the routes required for Element.

---
# Element Web
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: custom-chat-ess-gateway-route
  namespace: ess
  labels:
    application: gateway-api
    service: HTTPRoute
    route: chat-ess
spec:
  parentRefs:
    - name: flex-gateway
      sectionName: chat-ess-https
      namespace: envoy-gateway
  hostnames:
    - "chat.ess.yourdomain.tld"
  rules:
    - backendRefs:
        - name: ess-element-web
          port: 80
      sessionPersistence:
        sessionName: EssChatSession
        type: Cookie
        absoluteTimeout: 300s
        cookieConfig:
          lifetimeType: Permanent
---
# Matrix Authentication Service (MAS)
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: custom-account-ess-gateway-route
  namespace: ess
  labels:
    application: gateway-api
    service: HTTPRoute
    route: account-ess
spec:
  parentRefs:
    - name: flex-gateway
      sectionName: account-ess-https
      namespace: envoy-gateway
  hostnames:
    - "account.ess.yourdomain.tld"
  rules:
    - backendRefs:
        - name: ess-matrix-authentication-service
          port: 8080
      sessionPersistence:
        sessionName: EssAccountSession
        type: Cookie
        absoluteTimeout: 300s
        cookieConfig:
          lifetimeType: Permanent
---
# Matrix RTC (SFU)
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: custom-account-mrtc-gateway-route
  namespace: ess
  labels:
    application: gateway-api
    service: HTTPRoute
    route: account-mrtc
spec:
  parentRefs:
    - name: flex-gateway
      sectionName: mrtc-ess-https
      namespace: envoy-gateway
  hostnames:
    - "mrtc.ess.yourdomain.tld"
  rules:
    - backendRefs:
        - name: ess-matrix-rtc-sfu
          port: 7880
      sessionPersistence:
        sessionName: EssRTCSession
        type: Cookie
        absoluteTimeout: 300s
        cookieConfig:
          lifetimeType: Permanent
---
# Synapse + MAS login paths
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: custom-account-matrix-gateway-route
  namespace: ess
  labels:
    application: gateway-api
    service: HTTPRoute
    route: account-matrix
spec:
  parentRefs:
    - name: flex-gateway
      sectionName: matrix-ess-https
      namespace: envoy-gateway
  hostnames:
    - "matrix.ess.yourdomain.tld"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /_matrix/client/api/v1/login
        - path:
            type: PathPrefix
            value: /_matrix/client/api/v1/refresh
        - path:
            type: PathPrefix
            value: /_matrix/client/api/v1/logout
        - path:
            type: PathPrefix
            value: /_matrix/client/r0/login
        - path:
            type: PathPrefix
            value: /_matrix/client/r0/refresh
        - path:
            type: PathPrefix
            value: /_matrix/client/r0/logout
        - path:
            type: PathPrefix
            value: /_matrix/client/v3/login
        - path:
            type: PathPrefix
            value: /_matrix/client/v3/refresh
        - path:
            type: PathPrefix
            value: /_matrix/client/v3/logout
        - path:
            type: PathPrefix
            value: /_matrix/client/v3/unstable/login
        - path:
            type: PathPrefix
            value: /_matrix/client/v3/unstable/refresh
        - path:
            type: PathPrefix
            value: /_matrix/client/v3/unstable/logout
      backendRefs:
        - name: ess-matrix-authentication-service
          port: 8080
    - matches:
        - path:
            type: PathPrefix
            value: /_synapse
        - path:
            type: PathPrefix
            value: /_matrix
      backendRefs:
        - name: ess-synapse
          port: 8008
      sessionPersistence:
        sessionName: EssMatrixSession
        type: Cookie
        absoluteTimeout: 300s
        cookieConfig:
          lifetimeType: Permanent
---
# Well-known publisher (discovery)
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: custom-well-known-gateway-route
  namespace: ess
  labels:
    application: gateway-api
    service: HTTPRoute
    route: well-known
spec:
  parentRefs:
    - name: flex-gateway
      sectionName: well-known-https
      namespace: envoy-gateway
  hostnames:
    - "ess.yourdomain.tld"
  rules:
    - backendRefs:
        - name: ess-well-known
          port: 8010
      sessionPersistence:
        sessionName: WellKnownSession
        type: Cookie
        absoluteTimeout: 300s
        cookieConfig:
          lifetimeType: Permanent

With the file created, we apply it to the environment.

kubectl apply -f /etc/genestack/gateway-api/routes/custom-element-gateway-route.yaml

Patch Envoy Gateway listeners for the new hosts

To get our Gateway API serving the element routes, we have to patch the listeners.

Generate a listeners patch and save it at /etc/genestack/gateway-api/listeners/element-https.json.

[
  {
    "op": "add",
    "path": "/spec/listeners/-",
    "value": {
      "name": "chat-ess-https",
      "port": 443,
      "protocol": "HTTPS",
      "hostname": "chat.ess.yourdomain.tld",
      "allowedRoutes": { "namespaces": { "from": "All" } },
      "tls": {
        "certificateRefs": [ { "group": "", "kind": "Secret", "name": "chat-ess-gw-tls-secret" } ],
        "mode": "Terminate"
      }
    }
  },
  {
    "op": "add",
    "path": "/spec/listeners/-",
    "value": {
      "name": "account-ess-https",
      "port": 443,
      "protocol": "HTTPS",
      "hostname": "account.ess.yourdomain.tld",
      "allowedRoutes": { "namespaces": { "from": "All" } },
      "tls": {
        "certificateRefs": [ { "group": "", "kind": "Secret", "name": "account-ess-gw-tls-secret" } ],
        "mode": "Terminate"
      }
    }
  },
  {
    "op": "add",
    "path": "/spec/listeners/-",
    "value": {
      "name": "mrtc-ess-https",
      "port": 443,
      "protocol": "HTTPS",
      "hostname": "mrtc.ess.yourdomain.tld",
      "allowedRoutes": { "namespaces": { "from": "All" } },
      "tls": {
        "certificateRefs": [ { "group": "", "kind": "Secret", "name": "mrtc-ess-gw-tls-secret" } ],
        "mode": "Terminate"
      }
    }
  },
  {
    "op": "add",
    "path": "/spec/listeners/-",
    "value": {
      "name": "matrix-ess-https",
      "port": 443,
      "protocol": "HTTPS",
      "hostname": "matrix.ess.yourdomain.tld",
      "allowedRoutes": { "namespaces": { "from": "All" } },
      "tls": {
        "certificateRefs": [ { "group": "", "kind": "Secret", "name": "matrix-ess-gw-tls-secret" } ],
        "mode": "Terminate"
      }
    }
  },
  {
    "op": "add",
    "path": "/spec/listeners/-",
    "value": {
      "name": "well-known-https",
      "port": 443,
      "protocol": "HTTPS",
      "hostname": "ess.yourdomain.tld",
      "allowedRoutes": { "namespaces": { "from": "All" } },
      "tls": {
        "certificateRefs": [ { "group": "", "kind": "Secret", "name": "ess-gw-tls-secret" } ],
        "mode": "Terminate"
      }
    }
  }
]

With the patch file in place, patch the Gateway.

kubectl patch -n envoy-gateway gateway flex-gateway \
  --type='json' \
  --patch="$(jq -s 'flatten | .' /etc/genestack/gateway-api/listeners/element-https.json)"

Operations

Once these two files are created and applied, future updates to the gateway will keep these listeners as part of the spec. This means even when deploying updates to the Genestack environment, the patch file will respect the larger cloud platform and ensure compatibility.

Within a couple minutes, the environment will have all of the certificate information generated and will be navigable.
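Because the listeners file sticks around and gets re-applied, it helps to be able to regenerate it. The five entries differ only in listener name, hostname, and secret name, so a small generator can emit the whole patch. This is a sketch with hypothetical function names; the listener, host, and secret names are the ones used in the patch file above.

```shell
# Emit one JSON Patch operation that appends an HTTPS listener.
# $1 = listener name, $2 = hostname, $3 = TLS secret name
listener_op() {
  cat <<EOF
  {
    "op": "add",
    "path": "/spec/listeners/-",
    "value": {
      "name": "$1",
      "port": 443,
      "protocol": "HTTPS",
      "hostname": "$2",
      "allowedRoutes": { "namespaces": { "from": "All" } },
      "tls": {
        "certificateRefs": [ { "group": "", "kind": "Secret", "name": "$3" } ],
        "mode": "Terminate"
      }
    }
  }
EOF
}

# Print the complete patch document for a base server name.
render_listener_patch() {
  base="$1"
  printf '[\n'
  listener_op chat-ess-https    "chat.${base}"    chat-ess-gw-tls-secret
  printf ',\n'
  listener_op account-ess-https "account.${base}" account-ess-gw-tls-secret
  printf ',\n'
  listener_op mrtc-ess-https    "mrtc.${base}"    mrtc-ess-gw-tls-secret
  printf ',\n'
  listener_op matrix-ess-https  "matrix.${base}"  matrix-ess-gw-tls-secret
  printf ',\n'
  listener_op well-known-https  "${base}"         ess-gw-tls-secret
  printf ']\n'
}
```

Something like `render_listener_patch ess.yourdomain.tld > /etc/genestack/gateway-api/listeners/element-https.json` recreates the file, and keeping the generator next to it in Git means a hostname change is a one-line diff.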

Quick health checks

Pods and services

kubectl -n ess get pods,svc

This check should return all the pods within the deployment and validate that they're running.

Routes accepted by the gateway

kubectl -n ess get httproute
kubectl -n envoy-gateway get gateway flex-gateway -o yaml | yq '.spec.listeners[].hostname' | grep ess

This check will show all of the HTTP routes and the gateway.
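Listing the routes shows that they exist, not that the gateway accepted them. As a hedged follow-up, the sketch below reads the `Accepted` condition that the Gateway API publishes under each route's `status.parents`. The function name is hypothetical; the route names are the ones created earlier.

```shell
# Print the Accepted status reported for each Element route.
# Anything other than "True" means the parentRef, sectionName, or hostname
# on that route does not line up with a listener on the gateway.
check_routes_accepted() {
  for route in \
    custom-chat-ess-gateway-route \
    custom-account-ess-gateway-route \
    custom-account-mrtc-gateway-route \
    custom-account-matrix-gateway-route \
    custom-well-known-gateway-route
  do
    status="$(kubectl -n ess get httproute "$route" \
      -o jsonpath='{.status.parents[*].conditions[?(@.type=="Accepted")].status}')"
    printf '%-40s %s\n' "$route" "${status:-unknown}"
  done
}
```

If a route reports `False` or `unknown`, `kubectl -n ess describe httproute <name>` usually carries the reason in its conditions.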

Well-known discovery

curl -s https://ess.yourdomain.tld/.well-known/matrix/client | jq
curl -s https://ess.yourdomain.tld/.well-known/matrix/server | jq

These checks will use cURL to validate that the client and server systems are running.

MAS login path probe (should 200/302 appropriately)

curl -I https://matrix.ess.yourdomain.tld/_matrix/client/v3/login

Once again we'll use cURL to validate that the login system is responding.

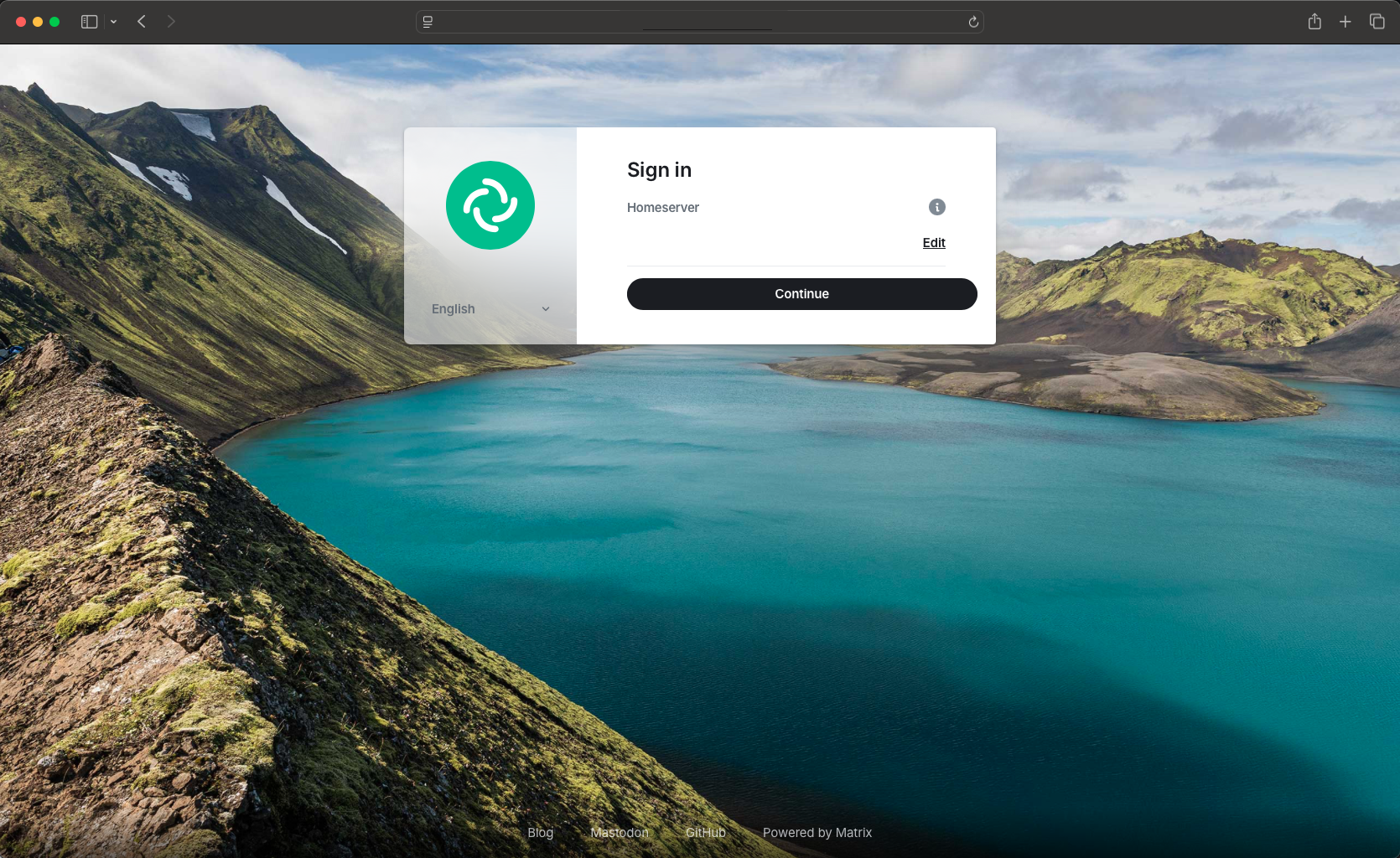

Browser login

Finally validate that you're able to get to the webUI by navigating to https://chat.ess.yourdomain.tld in your browser.

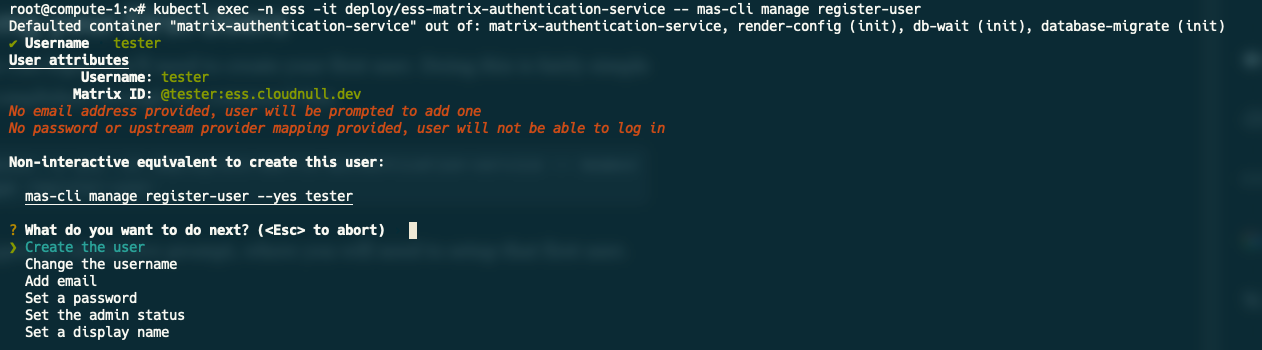

Creating your First Users

Before you can login, you'll need to create your first user. Doing this is fairly simple and is accomplished through the command-line.

kubectl exec -n ess -it deploy/ess-matrix-authentication-service -- mas-cli manage register-user

This will open an interactive prompt, where you will need to set up that first user.

Within this prompt, make sure to set a password, email, admin status, and display name before running "Create the user." Once this user is online, return to the web UI and proceed with your initial login.

Troubleshooting (aka things I learned so you don’t have to)

404 on /.well-known/matrix/*

Check the custom-well-known-gateway-route and the ess-well-known service/pod. Also confirm the listener well-known-https exists and your ess-gw-tls-secret is valid.

Element loads, but login fails

MAS routes likely aren’t matching. Re-check the `custom-account-matrix-gateway-route` path prefixes and ensure MAS is healthy.

kubectl -n ess logs deploy/ess-matrix-authentication-service | tail -n 100

RTC calls connect, then drop

Verify the mrtc.ess.yourdomain.tld listener and route; confirm SFU service ess-matrix-rtc-sfu:7880 is reachable and any required UDP/TCP ports are allowed by your cloud/LB. Some SFUs also require explicit public address config when behind NAT.

Random 413/5xx during uploads

You might need to set no strict-limits in HAProxy. Double-check the configmap and restart the haproxy pods.

Adding this configuration to HAProxy is straightforward. Simply edit the ConfigMap and restart the service.

kubectl -n ess edit configmaps ess-haproxy

The no strict-limits option is required to allow HAProxy to handle large requests, which is necessary for Element to function properly. The value will look something like this:

global
  no strict-limits

Now roll the deployment to pick up the changes.

kubectl -n ess rollout restart deployment ess-haproxy

While the deployment should work out of the box, there's a chance that HAProxy will fail to start due to file descriptor limits. To ensure this does not happen, edit the HAProxy ConfigMap, adding the option `no strict-limits` to the **global** section.

Why this matters

Larger payloads show up during file uploads, media, encrypted attachments, and some auth flows. Without this, you’ll chase weird 413s/5xx.

TLS oddities

Confirm each listener references the correct secret name and each secret contains the right cert/key for that hostname. SAN mismatches will cause head-scratching browser errors.
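One way to take the guesswork out of this is to read the certificate straight out of the secret the listener points at. A minimal sketch follows, assuming the secrets live in the `envoy-gateway` namespace next to the Gateway and that your `openssl` is new enough to support `-ext` (1.1.1 or later). The function name is hypothetical.

```shell
# Show the subject, expiry, and SANs of the certificate stored in a TLS secret.
# $1 = secret name, for example matrix-ess-gw-tls-secret
secret_cert_info() {
  kubectl -n envoy-gateway get secret "$1" -o jsonpath='{.data.tls\.crt}' \
    | base64 -d \
    | openssl x509 -noout -subject -enddate -ext subjectAltName
}
```

Compare the SAN list against the `hostname` on the matching listener; if `matrix.ess.yourdomain.tld` is not in there, the browser error is explained.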

Day-2 Operations

Upgrades

Because installs are really just special-case upgrades, running upgrades in the environment is simple, and the use of Kustomize ensures that we're not losing anything along the way.

helm upgrade --install \
  --namespace "ess" \
  ess oci://ghcr.io/element-hq/ess-helm/matrix-stack \
  -f /etc/genestack/helm-configs/element/hostnames.yaml \
  --post-renderer /etc/genestack/kustomize/kustomize.sh \
  --post-renderer-args element/base \
  --wait

Rollbacks

In the event of a failed deployment or upgrade, we can always use Helm to roll back our deployment.

helm -n ess history ess
helm -n ess rollback ess <REVISION>

Backups

Snapshot PVCs for Synapse data (database + media store). If you’re using an external DB (recommended for production), take proper DB backups and test restores. Another neat feature of running within a Genestack environment is that Genestack generally uses Longhorn for PVC storage, which means that snapshotting and backups are generally handled and can be easily extended as needed.

Keep /etc/genestack/* (values, routes, listeners) in Git. GitOps saves weekends.

Security hygiene

- Set a proper `Content-Security-Policy` on Element if you embed it in other apps.
- If you enable SSO/OIDC via MAS, rotate client secrets regularly and set short-lived cookies with secure flags.

Closing Thoughts

Running Element/Matrix on Genestack gives you an enterprise-grade, Kubernetes-native comms stack that you actually control. With Gateway API in the mix, your routing logic is declarative, audited, and easy to evolve. When in doubt, helm rollback before you invent a new incident.

If you use this setup, or improve on it, share your tweaks. Someone else’s 2 AM will thank you.